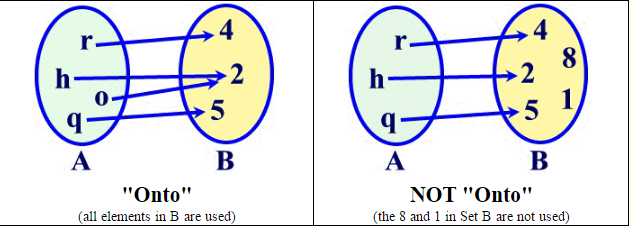

Support Vector Machines (SVMs) are a class of Machine Learning algorithms that are used quite frequently these days. Named after their method for learning a decision boundary, SVMs are binary classifiers, meaning that they only work with a 0/1 class scenario. In other words, it is not possible to create a multiclass classification scenario with an SVM natively.

Fortunately, there are some methods for allowing SVMs to be used with multiclass classification. In this article, we focus on two similar but slightly different ones: one-vs-rest classification and one-vs-one classification. Both use multiple binary SVM classifiers to arrive at a multiclass prediction.

First, we'll look at multiclass classification in general. It serves as a brief recap, and gives us the necessary context for the rest of the article. After introducing multiclass classification, we will take a look at why it is not possible to create multiclass SVMs natively. That is, why they are binary classifiers and binary classifiers only. This is followed by two approaches for creating multiclass SVMs anyway (tricks, essentially): the one-vs-rest and one-vs-one classifiers. Those approaches include examples that illustrate step-by-step how to create them with the Scikit-learn machine learning library.

Classification can be pictured as assigning items to buckets, where each bucket is a category. In the binary case, there are only two buckets, and hence two categories. This can be implemented with most machine learning algorithms. The other two cases, multiclass and multilabel classification, are different. In the multiclass case, we can assign items into one of multiple (> 2) buckets; in the multilabel case, we can assign multiple labels to one instance.

3 Variants of Classification Problems in Machine Learning

Multiclass classification can therefore be used in the setting where your classification dataset has more than two classes. Multiclass classification is reflected in the figure above. We clearly have no binary classifier: there are three buckets. Nor do we have a multilabel classifier: we assign items into buckets, rather than attaching multiple labels onto each item and then moving them into one bucket.

Implementing a multiclass classifier is easy when you are using Neural networks.

Why you cannot create multiclass SVMs natively

Let's now take a look at why this cannot be done so easily with SVMs.

You see samples from two classes, black and white, plotted in a scatter plot, which visualizes a two-dimensional feature space. In addition, you see three decision boundaries: \(H_1\), \(H_2\) and \(H_3\). The first is not capable of adequately separating the classes. The second is, and the third is as well.

Figure author: Zack Weinberg, derived from Cyc's work.

But which one is best if you are training a Support Vector Machine? The answer follows from how SVMs work: they are maximum-margin classifiers, which means that they attempt to generate a decision boundary that is equidistant from the two classes of data. (A point is said to be equidistant from a set of objects if the distances between that point and each object in the set are equal.) Of the boundaries that separate the classes, an SVM therefore prefers the one with the largest margin. To be more precise, it does not take the whole class into account, but rather the samples closest to the decision boundary, the so-called support vectors.
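To make the maximum-margin idea concrete, the sketch below fits a linear SVM on a small, made-up two-class dataset and inspects its support vectors. The data points, the choice of `C=1e6` (to approximate a hard margin on separable data) and all variable names are illustrative assumptions, not taken from the article.

```python
import numpy as np
from sklearn.svm import SVC

# Hypothetical toy data: two linearly separable classes in a 2D feature space.
X = np.array([[1.0, 1.0], [1.0, 2.0], [2.0, 1.0],
              [4.0, 4.0], [4.0, 5.0], [5.0, 4.0]])
y = np.array([0, 0, 0, 1, 1, 1])

# Assumption: a very large C approximates a hard-margin SVM here.
clf = SVC(kernel="linear", C=1e6)
clf.fit(X, y)

# For a linear kernel, the decision boundary is w . x + b = 0.
w = clf.coef_[0]
b = clf.intercept_[0]

# Only the samples closest to the boundary end up as support vectors.
support_vectors = clf.support_vectors_

# Distance from each support vector to the decision boundary.
distances = np.abs(support_vectors @ w + b) / np.linalg.norm(w)

# Width of the margin between the two classes.
margin_width = 2.0 / np.linalg.norm(w)

print("support vectors:\n", support_vectors)
print("distances to boundary:", distances)
print("margin width:", margin_width)
```

The support vectors of both classes should lie at (approximately) the same distance from the boundary, which is what "equidistant" means in this setting. Samples further away do not influence where the boundary ends up.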
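As a minimal sketch of the one-vs-rest and one-vs-one strategies named in this article, Scikit-learn's `OneVsRestClassifier` and `OneVsOneClassifier` wrappers can each be placed around a binary SVM. The synthetic four-class dataset from `make_blobs` and all parameter values below are assumptions chosen for illustration.

```python
from sklearn.datasets import make_blobs
from sklearn.multiclass import OneVsOneClassifier, OneVsRestClassifier
from sklearn.svm import SVC

# Hypothetical dataset: 4 classes in a 2D feature space.
X, y = make_blobs(n_samples=200, centers=4, cluster_std=1.0, random_state=42)

# One-vs-rest: one binary SVM per class (this class vs. all other classes).
ovr = OneVsRestClassifier(SVC(kernel="linear"))
ovr.fit(X, y)

# One-vs-one: one binary SVM per pair of classes.
ovo = OneVsOneClassifier(SVC(kernel="linear"))
ovo.fit(X, y)

# Number of underlying binary classifiers each strategy trained.
n_ovr = len(ovr.estimators_)
n_ovo = len(ovo.estimators_)
print("one-vs-rest binary classifiers:", n_ovr)
print("one-vs-one binary classifiers:", n_ovo)

# Both wrappers expose the usual multiclass predict() interface.
print("one-vs-rest predictions:", ovr.predict(X[:5]))
print("one-vs-one predictions:", ovo.predict(X[:5]))
```

With k classes, one-vs-rest trains k binary classifiers, while one-vs-one trains k(k-1)/2, so the two counts differ once there are more than three classes.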
